Artificial intelligence is a branch of computer science that aims to create systems capable of performing tasks that normally require human intelligence, such as reasoning, interpretation or decision-making, through techniques such as machine learning and natural language processing. By combining mathematics, computer science and linguistics, AI is revolutionizing entire sectors, from healthcare to finance and digital creation. But what’s really behind this concept that has become a must-have?

How can a computer understand and respond to me?

For decades, the idea of a computer capable of speaking and understanding our language has seemed the stuff of science fiction. Today, however, this vision has become a reality, thanks to spectacular advances in computer science and natural language processing (NLP).

When we talk about natural language, we’re talking about the way we humans communicate: nuanced sentences full of ambiguity, emotion and sometimes even contradiction. For a computer to grasp this complex language, it’s not enough simply to program a dictionary. It has to learn to think like us, or at least to recognize the grammatical structures, cultural contexts and intentions behind each word.

Recent models such as Gemini or ChatGPT are trained on billions of words of text to learn how to respond in a natural, contextual way.

Of course, computers’ understanding of human language is not perfect. Cultural nuances, idiomatic expressions and even humor remain obstacles.

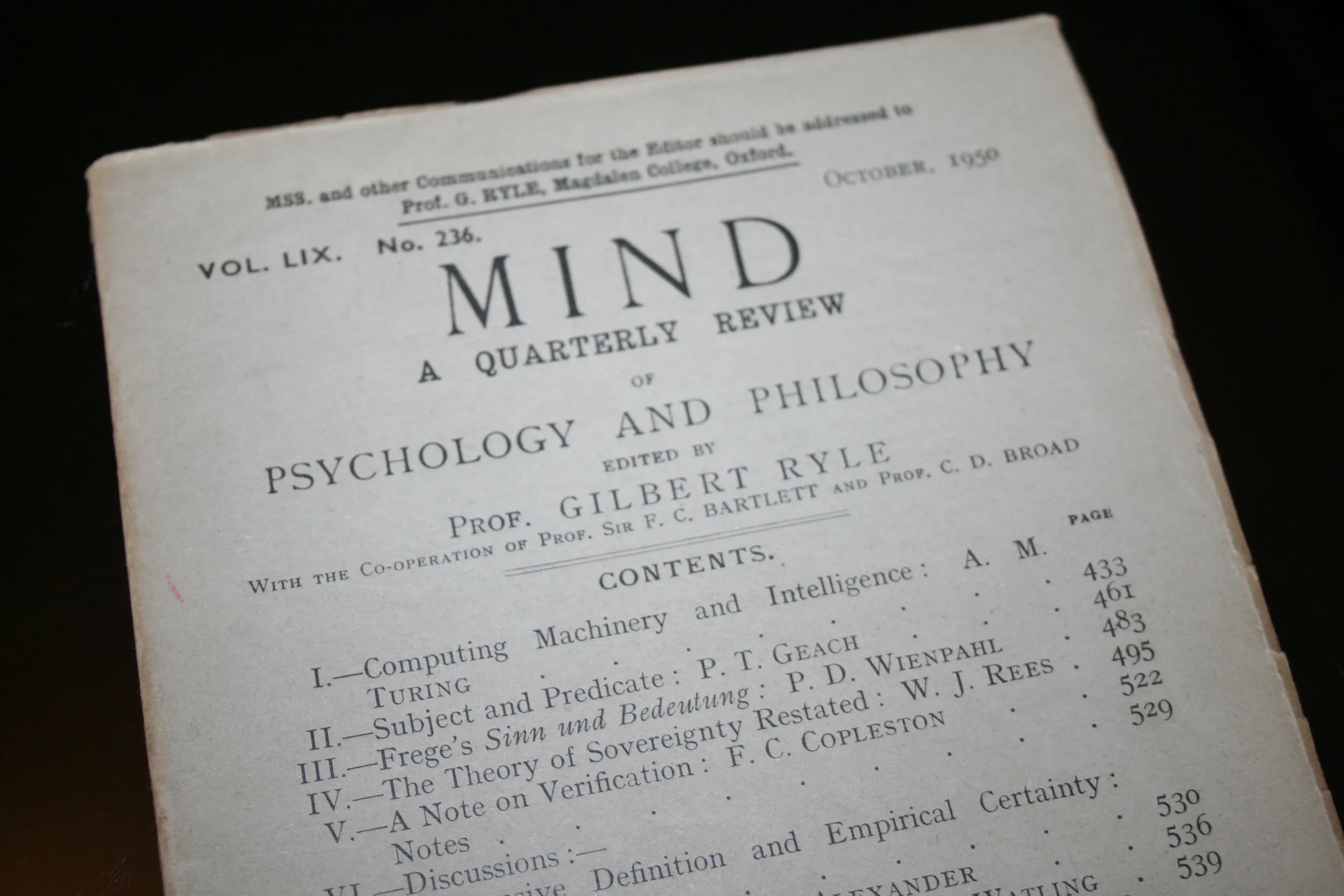

1950: An experimental test and the discovery of the first artificial intelligence

The history of computers capable of understanding natural human language dates back to the 1950s, when British mathematician Alan Turing published an article entitled Computing Machinery and Intelligence, in which he proposed the famous “Turing test”. This test aims to determine whether a machine can exhibit intelligent behavior indistinguishable from that of a human by assessing its ability to engage in conversation in natural language.

This idea inspired many researchers, including Joseph Weizenbaum, who developed the ELIZA program at MIT in 1966. ELIZA simulated a conversation by adopting the role of a Rogerian therapist, responding to users by rephrasing their statements in the form of questions. Although based on simple pattern-matching rules, ELIZA demonstrated the feasibility of human-machine interaction in natural language, arousing both fascination and debate about the limits of real understanding by machines.
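To give a feel for how little machinery this kind of rephrasing needs, here is a minimal Python sketch in the spirit of ELIZA. The rules, the `REFLECTIONS` table and the function names are hypothetical illustrations, not Weizenbaum's original script.

```python
import re

# Hypothetical rules: a pattern to look for and a question template to answer with.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones when echoing the user.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}


def reflect(fragment: str) -> str:
    """Lowercase the fragment, drop trailing punctuation, flip pronouns."""
    words = fragment.lower().rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Return the first matching rule's question, or a generic prompt."""
    for pattern, template in RULES:
        match = pattern.search(statement)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I feel sad about my job."))  # Why do you feel sad about your job?
```

Nothing here understands the sentence: the program only spots a pattern and mirrors the rest back, which is why ELIZA sparked a debate about real understanding.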

2011: Google Brain for online search

In 2011, Google launched the Google Brain project, an ambitious initiative to explore the potential of artificial intelligence (AI) and deep neural networks. The project, which began as a collaboration between Google and Stanford researchers, quickly evolved into a central pillar of technological innovation at Google.

From the outset, Google Brain has had a significant impact on one of the company’s flagship products: its search engine.

The main aim of Google Brain was to push back the boundaries of technology by combining deep learning with Google’s massive computing power. The idea was to use algorithms inspired by the human brain to analyze, understand and interpret data on an unprecedented scale.

By applying this technology to the search engine, Google aimed to improve the relevance of results and understand users’ intentions, even when their queries were ambiguous or poorly formulated.

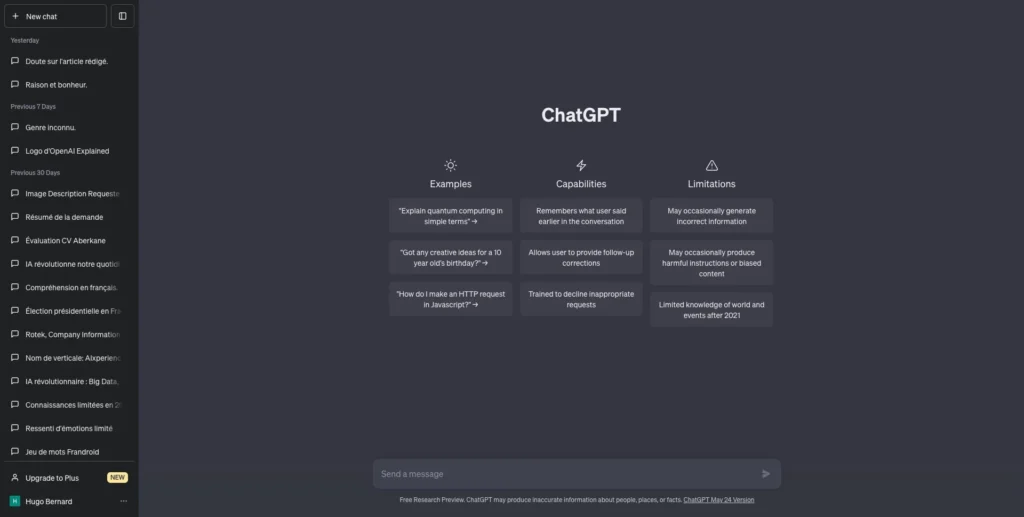

2022: ChatGPT, conversational artificial intelligence by OpenAI

ChatGPT, developed by OpenAI, is an advanced artificial intelligence model designed to interact with users via natural conversations. Based on GPT (Generative Pre-trained Transformer) technology, it is capable of understanding and generating text in many languages and on a multitude of subjects.

ChatGPT excels in a variety of areas: writing articles, creating marketing content, providing technical assistance, explaining complex concepts, and even acting as a coding assistant. Its ability to adapt to the tone and style required makes it a particularly useful tool for professionals, students and content creators.

The model is based on deep learning, trained on huge quantities of textual data. Thanks to its pre-training and fine-tuning mechanisms, it can provide precise, coherent and often contextually relevant answers. This enables it to simulate a near-human understanding of dialogues.

ChatGPT was born out of OpenAI’s extensive research into language models. OpenAI, founded in 2015 by the likes of Elon Musk and Sam Altman, set out to democratize access to artificial intelligence while ensuring its ethical development.

Generative Pre-trained Transformer: How does AI work?

Generative Pre-trained Transformer (GPT) models are based on the Transformer architecture, first introduced in the article “Attention Is All You Need” (Vaswani et al., 2017). GPTs are deep neural networks capable of analyzing and generating natural language on a large scale. They are called pre-trained because they are first exposed to huge amounts of text (this is the pre-training phase) and can then be specialized or tuned for various tasks (this is the fine-tuning phase).

A little background: where did the idea for the Transformer come from?

Let’s go back a few years. Before Transformers, natural language processing relied on more traditional methods such as recurrent networks (RNN, LSTM) or statistical approaches. These techniques worked, but struggled when it came to understanding the context of long sentences. The longer the sentence, the harder it was for the AI to keep track. The result was a certain difficulty in processing complex texts.

Instead of processing words one by one in sequence, the Transformer uses an attention mechanism that enables it to focus on all parts of a sentence simultaneously. This makes it much easier to understand and manipulate long sequences of text, without the machine getting lost along the way. This breakthrough has changed the game, paving the way for more powerful and versatile models.
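For the curious, the core of this mechanism is the scaled dot-product attention from the Vaswani et al. paper: softmax(QKᵀ / √d_k) · V. The sketch below is a deliberately stripped-down version in plain Python. It assumes a single attention head, toy two-dimensional vectors, and no learned projection matrices or masking, all of which a real Transformer would have. The function names are illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors (one per word)."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Compare this word's query with the key of every word at once.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend all value vectors according to the attention weights.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs


# Toy input: three "words", each represented by a 2-dimensional vector.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextualized = attention(words, words, words)
```

Each output vector is a weighted mix of every word in the sentence, which is what lets the model look at all positions at the same time instead of walking through them in order.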

The deep end: swallowing gigatons of text

When we talk about the training (or pre-training) phase for a GPT, we’re describing a process in which the AI will sift through billions of words. Digital books, blog articles, websites… in short, anything accessible in text format. The aim? To learn the rules of the language, from basic grammar to stylistic subtleties, without having to give it the slightest “correct/incorrect” label. This is what we call self-supervision.

The principle is simple: at each step, GPT will try to guess the next word in a sentence, just based on the previous context. Think of it as a gigantic guessing game, with the model refining its statistics through successive predictions. Little by little, it perfects its knowledge of the language, understands the correlation between words and, in the process, gathers a wealth of information about the world (which it sometimes spits out unfiltered, by the way!).
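The guessing game can be illustrated with something far simpler than a GPT. The sketch below counts which word follows which in a tiny made-up corpus and predicts the most frequent follower. This is a bigram counter, not a neural network: a real GPT looks at a long context and learns its statistics by gradient descent, but the training signal (the next word in the text itself, with no human label) is the same idea. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for previous, following in zip(words, words[1:]):
        counts[previous][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus; the text is its own supervision, no labels needed.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)

print(predict_next(model, "the"))  # cat
```

In this corpus "the" is followed by "cat" twice and "mat" once, so "cat" wins. Scale the corpus up to billions of words and replace the counting table with a Transformer, and you get the pre-training phase described above.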

Fine-tuning: specialization

Once GPT has digested immense quantities of text, it is already capable of producing coherent content. But we don’t stop there. There’s another “fine-tuning” stage, during which we specialize the model. For example, if we want a medical assistance chatbot, we’ll fine-tune it with verified health data, so that it responds accurately and cautiously.

This polishing can also be achieved through Reinforcement Learning from Human Feedback (RLHF), in which potential responses are shown to human evaluators. The latter rate the results, and the AI learns to match human expectations as closely as possible.
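As a rough illustration of how human ratings can become a training signal, the sketch below fits a score to each candidate response from pairwise "A is better than B" judgments, using a Bradley-Terry-style logistic objective. This is an assumption about one common setup, not a description of how any particular product is trained, and it covers only the scoring step: in full RLHF a neural reward model plays this role and the language model is then optimized against it. The response labels and hyperparameters are made up.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def fit_rewards(responses, preferences, lr=0.5, epochs=200):
    """Learn one score per response from (winner, loser) pairs."""
    rewards = {response: 0.0 for response in responses}
    for _ in range(epochs):
        for winner, loser in preferences:
            # Probability the current scores assign to the human's choice.
            p = sigmoid(rewards[winner] - rewards[loser])
            # Gradient ascent on log(p): push the winner up, the loser down.
            step = lr * (1.0 - p)
            rewards[winner] += step
            rewards[loser] -= step
    return rewards


# Hypothetical evaluator judgments on three candidate answers.
candidates = ["cautious", "vague", "blunt"]
judgments = [("cautious", "blunt"), ("cautious", "vague"), ("vague", "blunt")]
scores = fit_rewards(candidates, judgments)
best = max(scores, key=scores.get)
```

After fitting, the answer the evaluators preferred most often ends up with the highest score, and that ranking is the kind of signal the model is then nudged to follow.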