As games become increasingly memory-hungry, a technological breakthrough from NVIDIA and Microsoft could change the picture. Using artificial intelligence and a new texture compression method, some tests report memory savings of up to 90%, an advance that could extend the useful life of many graphics cards.

The end of VRAM shortages? NVIDIA and DirectX reduce memory usage by up to 90% thanks to AI

- VRAM saturation: why your graphics card slows down during gaming

- Neural Texture Compression: how AI reduces VRAM by up to 90%

- NTC, Cooperative Vectors and DirectX 12: how everything works together

VRAM saturation: why your graphics card slows down during gaming

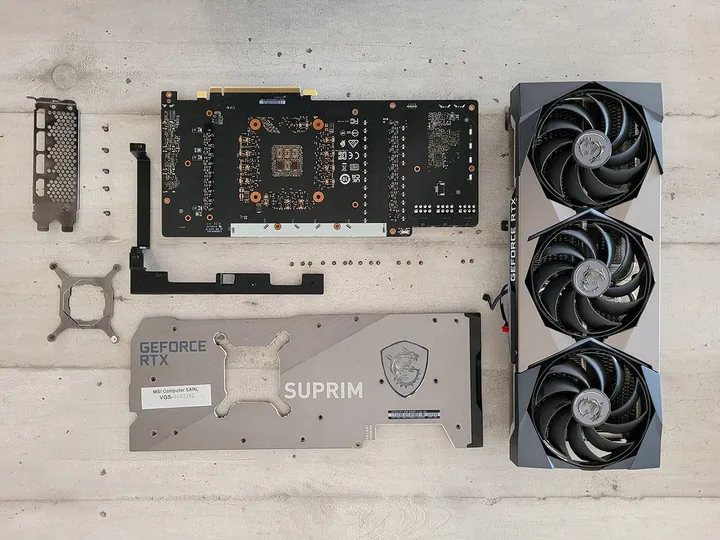

VRAM (video RAM) is the memory dedicated to the graphics card. It stores everything required to display a scene: textures, shadow maps, lighting data, render buffers, and so on. The more graphically complex a game, the more numerous and larger these elements become, particularly at high resolutions such as 1440p or 4K.

In a modern game, high-definition textures alone can take up several gigabytes. Ultra-detailed mods, ray tracing effects and AI-based rendering techniques add further to this load. As a result, even a card with 8 GB of VRAM can quickly reach its limits, especially in recent AAA titles.
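To give a sense of scale, the sketch below estimates the memory taken by a single texture. It assumes an uncompressed 8-bit RGBA format (4 bytes per texel) and a full mipmap chain; the function name `texture_bytes` is purely illustrative. Games usually store textures in block-compressed formats such as BC7, which cut these figures by roughly 4x, so treat the result as an upper bound rather than a measurement of any real title.

```python
def texture_bytes(width: int, height: int, bytes_per_texel: int = 4,
                  mipmaps: bool = True) -> int:
    """Total size in bytes of one texture, optionally including every mip level.

    Assumption: uncompressed storage (4 bytes per texel = 8-bit RGBA).
    """
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, never going below 1 texel.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


MIB = 1024 * 1024
size = texture_bytes(4096, 4096)
print(f"4096x4096 RGBA8 texture with mips: {size / MIB:.1f} MiB")  # → 85.3 MiB
```

A single material typically combines several such maps (color, normal, roughness and so on), which is how the total quickly climbs into the gigabytes.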

When VRAM is full, the graphics card has to fall back on system RAM, which it reaches over the much slower PCIe bus. This leads to FPS drops, blurry textures, stuttering and even crashes. It is an increasingly common problem, and one of the reasons AI-based compression is at the heart of current graphics innovation.

Neural Texture Compression: how AI reduces VRAM by up to 90%

NVIDIA's Neural Texture Compression (NTC) marks a major advance in graphics memory management. Its principle is simple: use artificial intelligence to compress video game textures while preserving their visual quality. Running neural decompression inside real-time rendering is a largely new approach, and it is part of a wider strategy to reduce VRAM consumption.

In concrete terms, NTC relies on small neural networks trained to reproduce the patterns found in game textures. Textures are stored in this compact form and decompressed on the fly by the GPU as it renders. This happens in real time, without any player intervention, and with minimal impact on performance: on the latest RTX cards, the cost of neural decompression is barely noticeable, while the memory load drops sharply.
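The idea of decoding texels on demand can be illustrated with a deliberately simplified sketch. It is not NVIDIA's NTC format or SDK: the class `TinyNeuralTexture`, the nearest-cell latent lookup and the two-layer network are hypothetical stand-ins, and the real NTC design differs in its details. What it shows is the principle: only a coarse grid of feature vectors and a few network weights are stored, and a texel's color is computed at the moment it is sampled.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dense(w: Matrix, x: Vector, b: Vector) -> Vector:
    """One fully connected layer: returns w @ x + b."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def relu(x: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in x]


class TinyNeuralTexture:
    """Hypothetical neural texture: a coarse latent grid plus a 2-layer decoder."""

    def __init__(self, latents: List[List[Vector]], cell_size: int,
                 w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> None:
        self.latents = latents      # latents[row][col] is a small feature vector
        self.cell_size = cell_size  # full-resolution texels covered by one cell
        self.w1, self.b1, self.w2, self.b2 = w1, b1, w2, b2

    def sample(self, x: int, y: int) -> Vector:
        """Decode the texel at full-resolution coordinates (x, y) on demand."""
        latent = self.latents[y // self.cell_size][x // self.cell_size]
        hidden = relu(dense(self.w1, latent, self.b1))
        return dense(self.w2, hidden, self.b2)  # e.g. an RGB value


# Illustrative values only: a 2x2 grid of 2-D latents standing in for an 8x8 texture.
latents = [[[1.0, 3.0], [0.0, 2.0]],
           [[4.0, 0.0], [2.0, 2.0]]]
identity = [[1.0, 0.0], [0.0, 1.0]]
to_rgb = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
tex = TinyNeuralTexture(latents, 4, identity, [0.0, 0.0], to_rgb, [0.0, 0.0, 0.0])
print(tex.sample(1, 1))  # → [1.0, 3.0, 2.0]
```

In a real renderer the weights come from training against the original texture, and the decode runs in a shader for each sampled texel rather than in Python.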

Initial tests are impressive: a 98 MB texture can be reduced to just 11 MB, a saving of almost 90% (11 / 98 ≈ 0.11, so about 89% saved). This gain lets games use more high-definition textures without exceeding the VRAM limit, which matters most for 8 GB GPUs, often considered "obsolete" for recent titles.
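The percentage follows directly from the two sizes. A quick check with a hypothetical helper, `compression_stats`, also shows how a percentage saving maps onto the "factor of" figures used later in the article: saving 80% means data 5 times smaller, saving 90% means 10 times smaller.

```python
from typing import Tuple


def compression_stats(original_mb: float, compressed_mb: float) -> Tuple[float, float]:
    """Return (percent of memory saved, compression ratio) for a before/after pair."""
    percent_saved = (1 - compressed_mb / original_mb) * 100
    ratio = original_mb / compressed_mb
    return percent_saved, ratio


saved, ratio = compression_stats(98, 11)
print(f"98 MB -> 11 MB: {saved:.1f}% saved, {ratio:.1f}x smaller")
# → 98 MB -> 11 MB: 88.8% saved, 8.9x smaller

# A saving of p percent corresponds to a ratio of 100 / (100 - p).
for p in (80, 90):
    print(f"{p}% saved = {100 / (100 - p):.0f}x smaller")
```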

| Feature | Classic texture | Compressed texture (NTC) |
|---|---|---|
| Sample file size | 98 MB | 11 MB |
| Loading time | Standard | Slightly longer |
| Visual quality | Reference | Visually identical |
| VRAM consumption | High | Up to 90% lower |
| GPU processing | Direct sampling | On-the-fly AI decompression |

NTC, Cooperative Vectors and DirectX 12: how everything works together

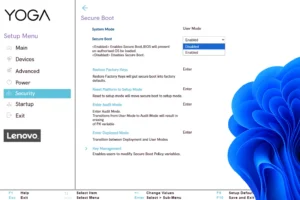

The effectiveness of neural texture compression (NTC) also depends on recent additions to DirectX 12 Ultimate. Alongside DirectX Raytracing (DXR) 1.2, Microsoft introduced Cooperative Vectors, a shader feature that lets the GPU run the small matrix-vector operations behind neural networks, such as on-the-fly neural decompression, on hardware-accelerated paths.

In the case of NTC, Cooperative Vectors let the GPU process the compressed data efficiently, keeping decompression latency low enough for real-time rendering. This accelerated, parallel processing is what makes it possible to reach image quality equivalent to that of uncompressed textures while cutting memory requirements by a factor of 5 to 10. Without this optimized software layer, AI alone would not be enough to guarantee smooth performance.
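Why this matters can be shown with a CPU-side analogy; it is not the HLSL Cooperative Vectors API. In a neural decoder, every shader thread multiplies its own input vector by the same weight matrix. Packing those vectors together turns many small matrix-vector products into one matrix-matrix product, the kind of operation that dedicated matrix hardware (Tensor Cores on NVIDIA RTX GPUs) accelerates. The helper names below are hypothetical, and the sketch simply checks that both routes give the same result.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Matrix-vector product: what each thread would compute on its own."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix-matrix product: the batched form matrix hardware is built for."""
    cols = list(zip(*b))
    return [[sum(ai * bi for ai, bi in zip(row, col)) for col in cols] for row in a]


# Shared layer weights (3 outputs, 2 inputs) and one input vector per "thread".
weights = [[1.0, 2.0], [3.0, 4.0], [0.5, -1.0]]
thread_inputs = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [-1.0, 3.0]]

# Route 1: each thread performs its own matrix-vector product.
per_thread = [matvec(weights, x) for x in thread_inputs]

# Route 2: pack the vectors as columns of one matrix and multiply once.
packed = [list(col) for col in zip(*thread_inputs)]  # 2 x 4
batched = matmul(weights, packed)                     # 3 x 4
unpacked = [list(col) for col in zip(*batched)]       # back to one vector per thread

print(per_thread == unpacked)  # → True
```

The arithmetic is identical either way; the gain on a GPU comes from handing the batched form to hardware designed for it.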

To take advantage of this technology, however, certain conditions must be met. It requires a GeForce RTX 40 series graphics card or newer, with full DirectX 12 Ultimate support. Older GPUs lack the dedicated hardware units and the Cooperative Vectors support, which limits access to this innovation. It is a hardware investment, admittedly, but one that paves the way for much smarter memory management in the years to come.