Artificial intelligence is gradually moving out of the cloud and directly onto our computers. Thanks to new software designed for Windows, it is now possible to run a complete AI without an Internet connection, with the same ease as an online service. This evolution redefines our relationship with technology, combining performance, confidentiality and autonomy. The personal computer thus regains its full meaning, once again becoming the center of intelligence.

The best local AI software and alternatives to ChatGPT for Windows

- What is local AI?

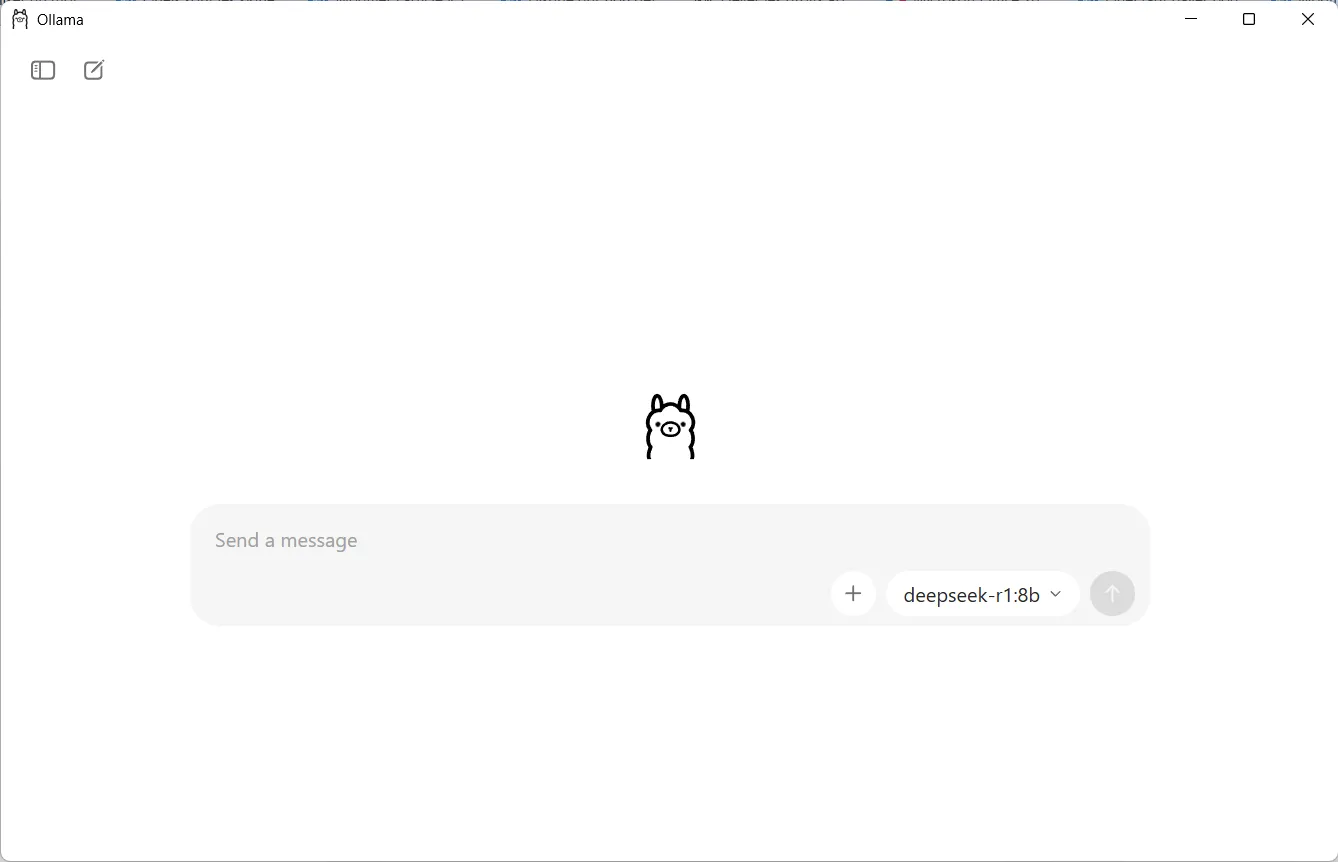

- 1. Ollama: running AI models locally

- 2. Jan AI: The local application that turns your PC into an intelligent assistant

- 3. LM Studio: a local alternative to online AI services

- Where can I download local AI models for Windows?

- The best AI models for mid-range PCs

What is local AI?

A local AI is software that runs directly on your computer, without the need for a remote server. Unlike online services like ChatGPT, which process your requests in the cloud, a local AI performs all the calculations on your own processor or graphics card. Once the model has been downloaded, it no longer depends on an Internet connection, offering a more private and fully controlled experience.

This approach profoundly changes the way we interact with artificial intelligence. Exchanges no longer pass through external servers, which removes the confidentiality risks of sending data to a third party and the dependence on a service's availability. Once the model is installed, there is no per-request cost beyond the hardware and electricity you already pay for, and there is no network latency: response speed depends only on the user’s hardware.

However, this autonomy has its constraints. The models must be adapted to the machine’s capabilities, their size is limited by the available memory, and installation sometimes requires a few technical adjustments. Once configured, however, local AI becomes a powerful and stable tool, capable of competing with online solutions while offering great freedom.

1. Ollama: running AI models locally

Ollama is an open-source platform designed to run large language models (LLMs) directly on a computer, without dependency on the cloud. Compatible with Windows, macOS and Linux, it enables models such as Llama 3, Mistral or Phi-3 to be downloaded and used locally, and offers a graphical interface, a command-line interface and an OpenAI-compatible local API. Thanks to its modular approach, Ollama automatically manages the downloading, quantization and launching of models, making it much easier to set up a private, autonomous and secure AI environment.

For technical users, Ollama fits into existing workflows, whether they are built around local applications, command-line tools or API servers. Execution relies on the processor or GPU to accelerate generation, and support for quantized models (GGUF format) means good performance can be achieved even on machines equipped with modest graphics cards. This makes Ollama a lightweight, high-performance solution for harnessing the power of modern AI models, while retaining full control over data and hardware.
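As an illustration of the local API mentioned above, here is a minimal sketch in Python that sends a prompt to Ollama's `/api/generate` endpoint using only the standard library. It assumes Ollama is running on its default address (`http://localhost:11434`) and that a model has already been pulled; the model name `llama3` and the helper names `build_generate_request` and `generate` are choices made for this example, not something prescribed by Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, the full answer arrives in the "response" field.
    return body["response"]


# Example call (needs a running Ollama server and a pulled model):
# print(generate("llama3", "Explain what a quantized model is in one sentence."))
```

Nothing in this exchange leaves the machine: the request goes to `localhost`, so the prompt and the answer stay on the PC.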

Follow my guide to using ChatGPT-OSS locally on Windows 11 with Ollama.

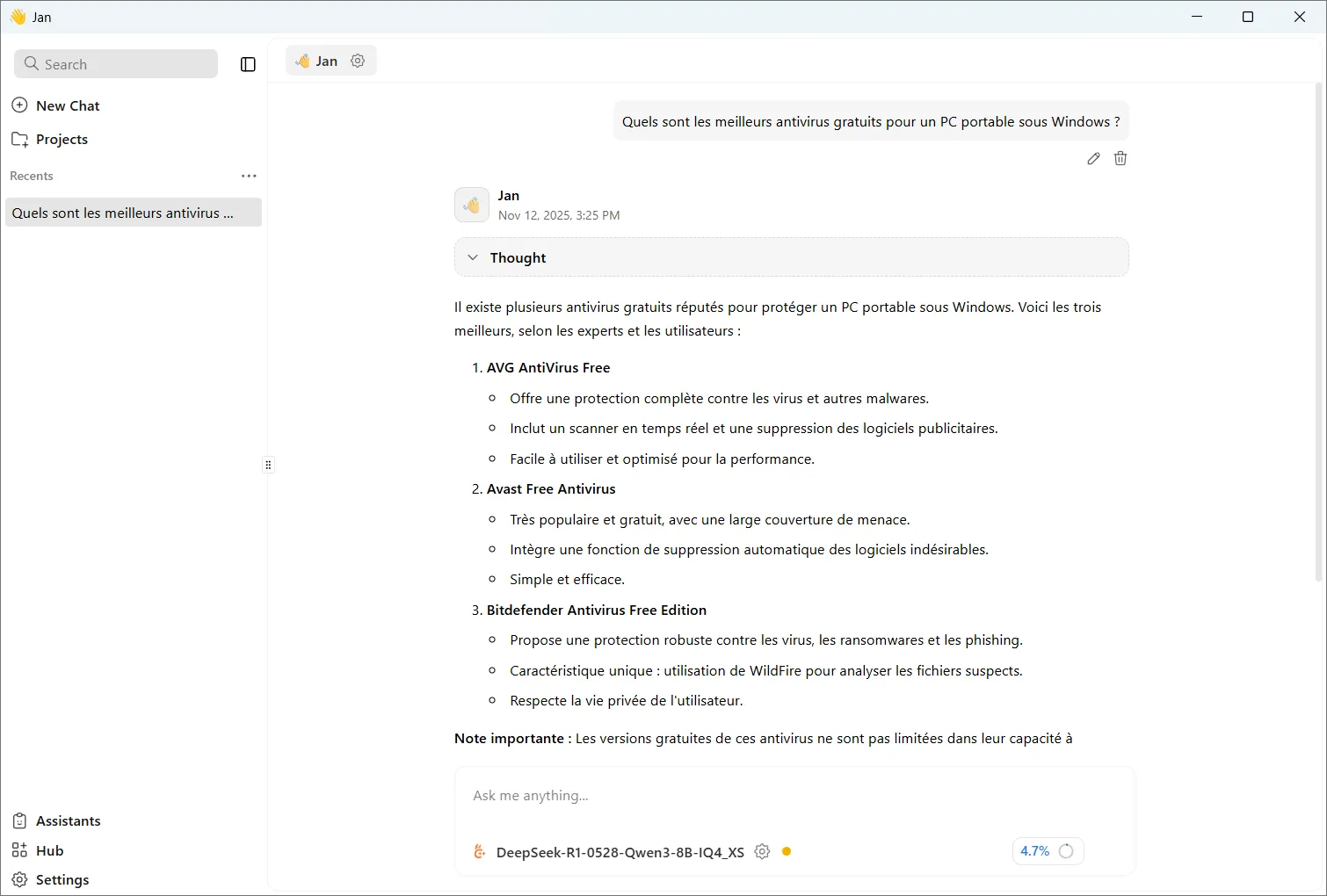

2. Jan AI: The local application that turns your PC into an intelligent assistant

Jan AI is an open-source application designed to enable anyone to run artificial intelligence directly on their own computer. Unlike online services, it is not dependent on any cloud and operates entirely locally, guaranteeing the confidentiality of exchanges. Its neat interface lets you download, manage and use advanced language models such as Llama, Mistral or Qwen, without going through the command line. In just a few minutes, a simple PC becomes a real workstation, capable of generating text, analyzing data or assisting with editing, while retaining control over the resources and information processed.

Behind its apparent simplicity, Jan AI incorporates a number of advanced features, such as support for quantized models (GGUF) to reduce memory use, the ability to switch between local and cloud modes as required, and experimental support for retrieval-augmented generation (RAG) to interact with documents. The application takes advantage of the processor or GPU to accelerate processing, adapting equally well to laptops and workstations equipped with NVIDIA or AMD cards. By combining portability, confidentiality and flexibility, Jan AI stands out as an alternative to cloud solutions, capable of running high-performance AI directly from the desktop.

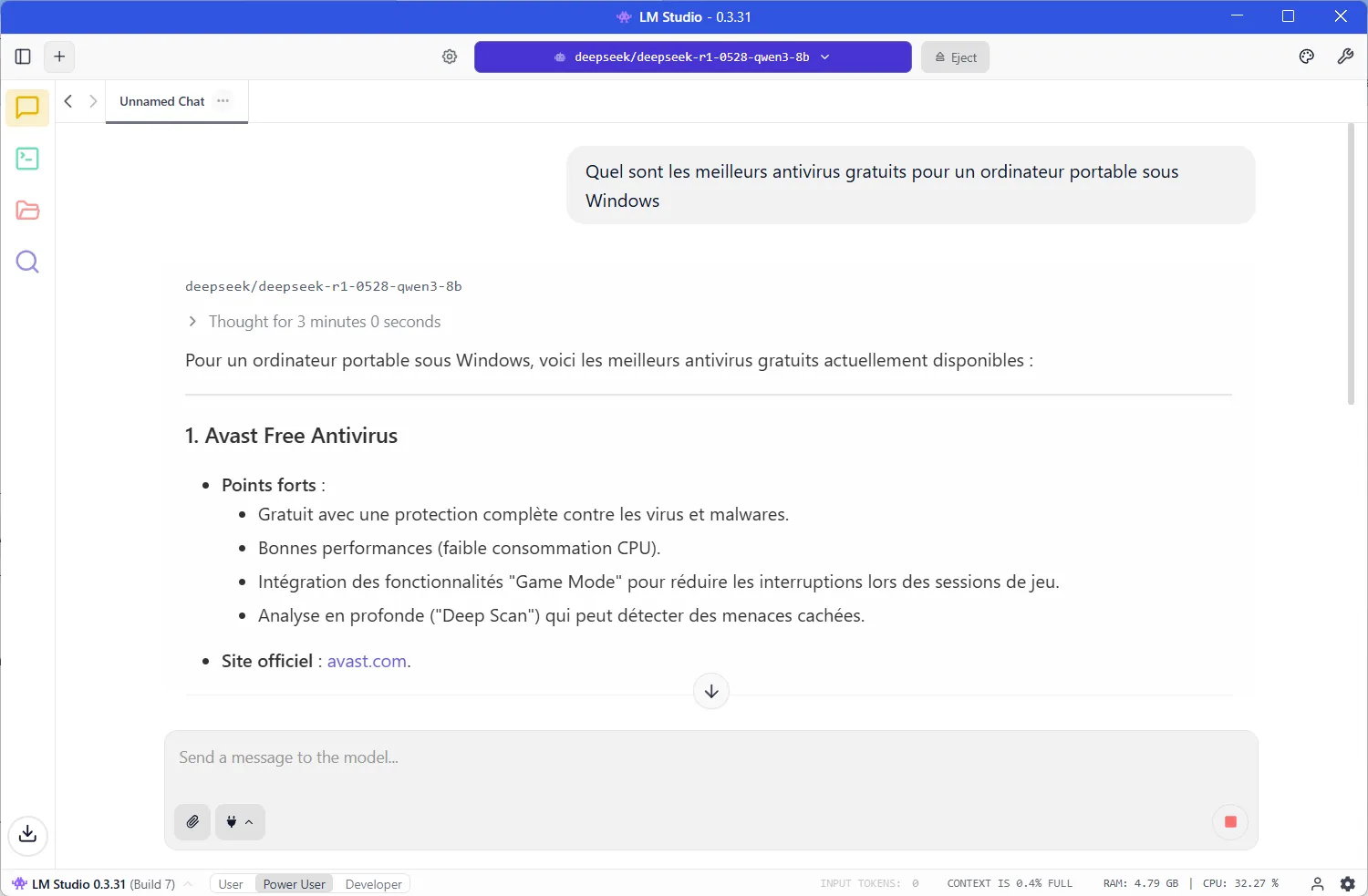

3. LM Studio: a local alternative to online AI services

LM Studio is a modern application that lets you run artificial intelligence models directly on your computer, without relying on an online service. Compatible with Windows, macOS and Linux, it offers an interface that makes the use of models such as Llama 3, Gemma or Mistral accessible to all, even without technical knowledge. Users can search for, download and test models in just a few clicks, while benefiting from entirely local, fast and privacy-friendly operation. LM Studio also features an OpenAI-compatible API server, enabling local models to be used with any application or development environment that speaks that API.

LM Studio adapts to both consumer computers and more advanced workstations. The application uses both the processor and the graphics card to deliver smooth execution with models of several billion parameters. Thanks to this approach, it turns a PC into a personal AI laboratory, capable of answering complex queries, writing texts or processing data without ever going through the cloud. LM Studio is an independent solution for exploring the potential of artificial intelligence models.
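To give an idea of what the OpenAI-compatible server looks like from the developer's side, here is a small Python sketch that posts a chat request to it. It assumes the server has been started in LM Studio on its default port (`1234`) and that a model is loaded; the model identifier passed to `chat` and the helper names (`chat_payload`, `extract_reply`, `chat`) are placeholders invented for this example.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
BASE_URL = "http://localhost:1234/v1"


def chat_payload(model: str, user_message: str, temperature: float = 0.7) -> dict:
    """Build the JSON body expected by an OpenAI-style chat completions endpoint."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Return the assistant's text from an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]


def chat(model: str, user_message: str, timeout: float = 120.0) -> str:
    """Send one user message to the local server and return the reply text."""
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(chat_payload(model, user_message)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return extract_reply(json.loads(resp.read().decode("utf-8")))


# Example call (needs the LM Studio server running with a model loaded;
# "my-local-model" is a placeholder for the identifier shown in LM Studio):
# print(chat("my-local-model", "Summarize the benefits of local AI in two lines."))
```

Because the request and response follow the OpenAI chat format, an existing application can often be pointed at the local server simply by changing its base URL.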

Discover my tutorial on how to install and use LM Studio with DeepSeek R1 on Windows.

Where can I download local AI models for Windows?

To make full use of local artificial intelligence software, you first need to download the model that will serve as your assistant’s brain. These models, known as LLMs (Large Language Models), come in several versions, depending on their size, complexity and the resources required to run them. Fortunately, the three main solutions (Ollama, LM Studio and Jan AI) each include their own library of models.

- Ollama has an official catalog directly accessible from ollama.com/library. Here you’ll find the most popular models, such as Llama 3, Phi-3, Mistral and Gemma, all ready to be downloaded and launched locally.

- LM Studio’s Discover tab lets you search for and install models from Hugging Face and other open source repositories with a single click.

- Jan AI takes a similar approach with its Hub interface, where recommended models adapt to the power of your machine to avoid slowdowns and loading errors.

In addition to the built-in libraries, open source models can be downloaded from platforms such as Hugging Face, GitHub and certain specialized communities. These models are distributed as GGUF files, a format optimized for local execution.
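When a model is downloaded by hand, a quick sanity check can save a confusing loading error later. The sketch below relies on the fact that a GGUF file begins with the four ASCII bytes `GGUF`, followed by a 32-bit little-endian version number. The function names and the example file path are hypothetical.

```python
import struct
from typing import Optional

GGUF_MAGIC = b"GGUF"  # first four bytes of every GGUF file


def parse_gguf_header(header: bytes) -> Optional[int]:
    """Return the GGUF version if the bytes start with a GGUF header, else None."""
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # The version is stored as an unsigned 32-bit little-endian integer.
    (version,) = struct.unpack("<I", header[4:8])
    return version


def gguf_version_of(path: str) -> Optional[int]:
    """Read the first 8 bytes of a file and return its GGUF version, or None."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(8))


# Example (hypothetical path to a downloaded model file):
# version = gguf_version_of(r"C:\models\my-model.Q4_K_M.gguf")
# print("Not a GGUF file" if version is None else f"GGUF version {version}")
```

A result of `None` usually means the download was interrupted or that an HTML error page was saved instead of the model.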

The best AI models for mid-range PCs

It’s perfectly possible to run local AI on a mid-range PC, provided you choose the right model. Contrary to popular belief, you don’t need an expensive GPU or an oversized machine to benefit from AI on a daily basis. Modern models such as DeepSeek 8B or Mistral 7B are small enough to run on affordable configurations, while still offering fast, high-quality responses.

| Model | Parameters | Highlights | Recommended format | Compatibility | Remarks |
|---|---|---|---|---|---|
| DeepSeek 8B | 8B | Excellent French and reasoning skills | GGUF Q4_K_M | Ollama, LM Studio | Ideal for small configurations |
| Mistral 7B | 7B | Versatile, natural response | GGUF Q4_K_M | Ollama, LM Studio, Jan AI | Excellent balance between speed and quality |
| Llama 3 8B | 8B | Advanced reasoning, consistency in long answers | GGUF Q4_K_M | Ollama, LM Studio | Very reliable |
| Phi-3 Mini | 3.8B | Fast, light, ideal for CPU | GGUF Q4_0 | Ollama, Jan AI | For simple tasks |

Quantized models in GGUF Q4_K_M or Q5_K_S format are particularly recommended, as they greatly reduce memory load with only a small loss in response quality. Whether you’re writing, summarizing, coding or simply chatting, these models offer a fast experience comparable to cloud solutions, but entirely local.
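To see why quantization matters on a mid-range PC, a back-of-the-envelope calculation helps: the size of the weights is roughly the number of parameters multiplied by the number of bits per weight, divided by 8 to get bytes. The sketch below applies that formula. The bits-per-weight values are approximations assumed for illustration (real files vary by model), and the function name is made up for this example.

```python
# Approximate bits per weight for common GGUF quantization types.
# These figures are assumptions for illustration; actual files vary slightly.
BITS_PER_WEIGHT = {
    "Q4_0": 4.5,
    "Q4_K_M": 4.85,
    "Q5_K_S": 5.5,
    "Q8_0": 8.5,
    "F16": 16.0,
}


def estimate_weights_gb(params_billion: float, quant: str) -> float:
    """Estimate the size of the model weights in gigabytes (10^9 bytes).

    size = parameters * bits_per_weight / 8
    Working in billions of parameters gives the result directly in GB.
    """
    return params_billion * BITS_PER_WEIGHT[quant] / 8


if __name__ == "__main__":
    for name, params in [("Phi-3 Mini", 3.8), ("Mistral 7B", 7.0), ("Llama 3 8B", 8.0)]:
        q4 = estimate_weights_gb(params, "Q4_K_M")
        f16 = estimate_weights_gb(params, "F16")
        print(f"{name}: about {q4:.1f} GB in Q4_K_M vs {f16:.1f} GB in F16")
```

Under these assumptions, a 7B model drops from about 14 GB in 16-bit precision to a little over 4 GB in Q4_K_M. The context window and the runtime itself need additional memory on top of the weights, so some headroom is advisable.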